Generative AI applications generate new content, such as text, images, videos, music, and other forms of media, based on user inputs. These systems learn from vast datasets containing millions of examples to recognize patterns and structures, without needing explicit programming for each task. This learning enables them to produce new content that mirrors the style and characteristics of the data they trained on.

AI-powered chatbots like ChatGPT can replicate human conversation. ChatGPT is built on a large language model that interprets and generates language by identifying patterns of word usage. It predicts the next words in a sequence, which proves useful for tasks ranging from writing emails and blogs to creating essays and programming code. Its adaptability to different writing and coding styles makes it a powerful and versatile tool. Major tech companies, such as Microsoft, are integrating ChatGPT into applications like MS Teams, Word, and PowerPoint, indicating a trend that other companies are likely to follow.
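To make the idea of next-word prediction more concrete, here is a minimal sketch in Python. It is a toy illustration only, not how ChatGPT is implemented: it counts which word most often follows another in a tiny, made-up sample text. The function names (`train_bigram_counts`, `predict_next`) and the sample sentence are hypothetical and chosen purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    candidates = followers.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy training text; real language models learn from vastly more data.
sample_text = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_counts(sample_text)

print(predict_next(model, "the"))  # most common word after "the" in the sample
print(predict_next(model, "on"))   # most common word after "on" in the sample
```

Large language models rely on far richer statistical patterns than simple word pairs, but the basic principle is similar: the prediction reflects whatever text the system was trained on, which is why the content of that training data matters.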

Despite their utility, these generative AI tools come with privacy risks for students. As these tools learn from the data they process, any personal information included in student assignments could be retained and used indefinitely. This poses several privacy issues: students may lose control over their personal data, face exposure to data breaches, and have their information used in ways they did not anticipate, especially when data is transferred across countries with varying privacy protections. To maintain privacy, it is crucial to handle student data transparently and with clear consent.

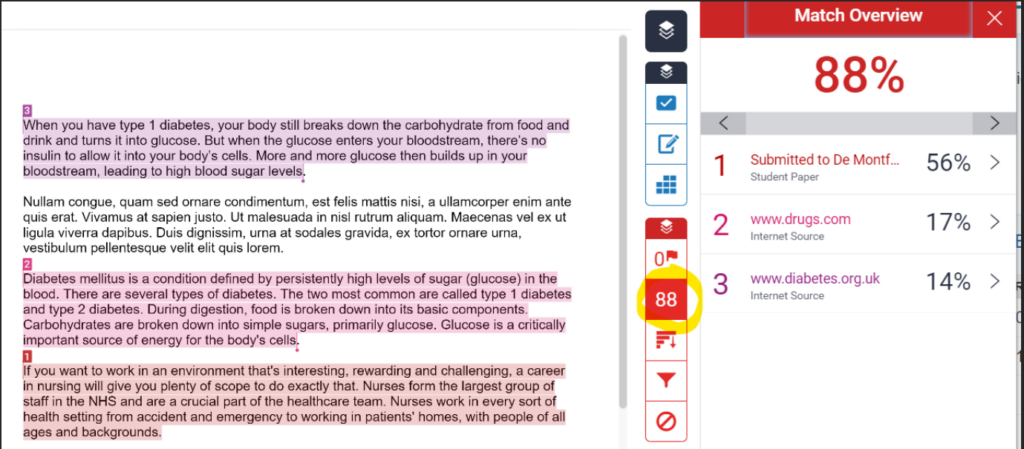

Detection tools like Turnitin now include features to identify content generated by AI, but these tools also collect and potentially store personal data for extended periods. While Turnitin has undergone privacy and risk evaluations, other emerging tools have not been similarly vetted, leaving their privacy implications unclear.

The ethical landscape of generative AI is complex, encompassing data bias concerns that can result in discriminatory outputs, and intellectual property issues, as these models often train on content without the original creators’ consent. Labour practices also present concerns: for example, OpenAI has faced criticism for the conditions of the workers it employs to filter out harmful content from its training data. Furthermore, the significant environmental impact of running large AI models, due to the energy required for training and data storage, raises sustainability questions. Users must stay well-informed and critical of AI platform outputs to ensure responsible and ethical use.

This article is part of a collaborative Data Privacy series by Langara’s Privacy Office and EdTech. If you have data privacy questions or would like to suggest a topic for the series, contact Joanne Rajotte (jrajotte@langara.ca), Manager of Records Management and Privacy, or Briana Fraser, Learning Technologist & Department Chair of EdTech.

A bit about how AI classifiers identify AI-generated content

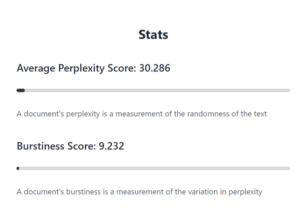

ChatGPT is underpinned by a large language model that requires massive amounts of data to function and improve. The more data the model is trained on, the better it gets at detecting patterns, anticipating what will come next and generating plausible text.

Interested in testing out commonly available AI detection tools, including Turnitin, GPTZero, OpenAI’s AI Text Classifier, and ZeroGPT? Join us to create a variety of human-generated, AI-generated, and mixed content and run it through various detection tools to compare the results.