AI classifiers don’t work!

Natural language processor AIs are meant to be convincing. They are creating content that “sounds plausible because it’s all derived from things that humans have said” (Marcus, 2023). The intent is to produce outputs that mimic human writing. The result: The world’s leading AI companies can’t reliably distinguish the products of their own machines from the work of humans.

In January, OpenAI released its own AI text classifier. According to OpenAI, “Our classifier is not fully reliable. In our evaluations on a ‘challenge set’ of English texts, our classifier correctly identifies 26% of AI-written text (true positives) as ‘likely AI-written,’ while incorrectly labeling human-written text as AI-written 9% of the time (false positives).”
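To see what those two rates could mean in practice, here is a rough sketch in Python. The class size and the number of AI-written essays are made-up assumptions for illustration, not figures from OpenAI; only the 26% and 9% rates come from the quote above.

```python
def expected_flags(n_ai, n_human, tpr=0.26, fpr=0.09):
    """Expected flag counts given OpenAI's reported rates (26% TP, 9% FP)."""
    true_flags = n_ai * tpr       # AI-written texts correctly flagged
    false_flags = n_human * fpr   # human-written texts wrongly flagged
    flagged = true_flags + false_flags
    # Share of all flags that point at genuinely AI-written text
    precision = true_flags / flagged if flagged else 0.0
    return true_flags, false_flags, precision


# Hypothetical class: 100 essays, 20 of them AI-written (an assumption).
true_flags, false_flags, precision = expected_flags(n_ai=20, n_human=80)
print(f"AI essays flagged: {true_flags:.1f} of 20")
print(f"Human essays wrongly flagged: {false_flags:.1f} of 80")
print(f"Share of flags that are correct: {precision:.0%}")
```

Under these assumed numbers, more human-written essays than AI-written essays would be flagged, and fewer than half of the flags would be correct. A different mix of essays would give different results.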

A bit about how AI classifiers identify AI-generated content

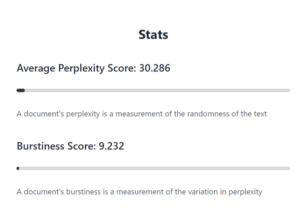

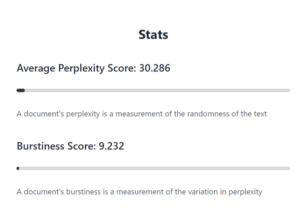

GPTZero, a commonly used detection tool, identifies AI-created works based on two factors: perplexity and burstiness.

Perplexity measures how predictable a text is to a language model. Classifiers tend to label text that is predictable and lacking complexity as AI-generated, and highly complex, surprising text as human-generated.
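As a rough illustration of the idea, perplexity is commonly computed as the exponential of the average negative log-probability a language model assigns to each word. The sketch below uses a toy word-frequency model built from one sentence. That model, the sample texts, and the function names are assumptions for illustration; real detectors rely on large neural language models, and GPTZero's own implementation is not described here.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens):
    """Return a function giving add-one-smoothed word probabilities (toy model)."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot for unseen words

    def prob(token):
        return (counts[token] + 1) / (total + vocab_size)

    return prob


def perplexity(tokens, prob):
    """Exponential of the average negative log-probability per token."""
    if not tokens:
        raise ValueError("need at least one token")
    avg_neg_log = -sum(math.log(prob(t)) for t in tokens) / len(tokens)
    return math.exp(avg_neg_log)


corpus = "the cat sat on the mat and the dog sat on the rug".split()
prob = train_unigram(corpus)

predictable = "the cat sat on the mat".split()
surprising = "quantum marmalade bewilders the rug".split()

print(perplexity(predictable, prob))  # lower: the model has seen these words
print(perplexity(surprising, prob))   # higher: mostly unseen words
```

The text the toy model finds familiar scores a lower perplexity than the text full of words it has never seen, which is the signal a detector would read as "more likely AI-generated."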

Burstiness compares variation between sentences. It measures how predictable a piece of content is by the homogeneity of the length and structure of sentences throughout the text. Human writing tends to be variable, switching between long and complex sentences and short, simpler ones. AI sentences tend to be more uniform with less creative variability.
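One simple way to put a number on this kind of variation is to compare how much sentence lengths spread around their average. The sketch below uses the coefficient of variation of sentence length as a stand-in. This is an assumed proxy chosen for illustration, not GPTZero's actual formula, and it looks only at length, not at sentence structure.

```python
import re
import statistics


def sentence_lengths(text):
    """Split on ., ! or ? and count the words in each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (std dev / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat on the mat. The dog lay on the rug. The bird sang in the tree."
varied = (
    "Stop. The storm that had been gathering over the hills all afternoon "
    "finally broke just after dusk. We ran."
)

print(burstiness(uniform))  # 0.0: every sentence has six words
print(burstiness(varied))   # well above zero: one long sentence between two short ones
```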

The lower the perplexity and burstiness scores, the more likely it is that the text is AI-generated.

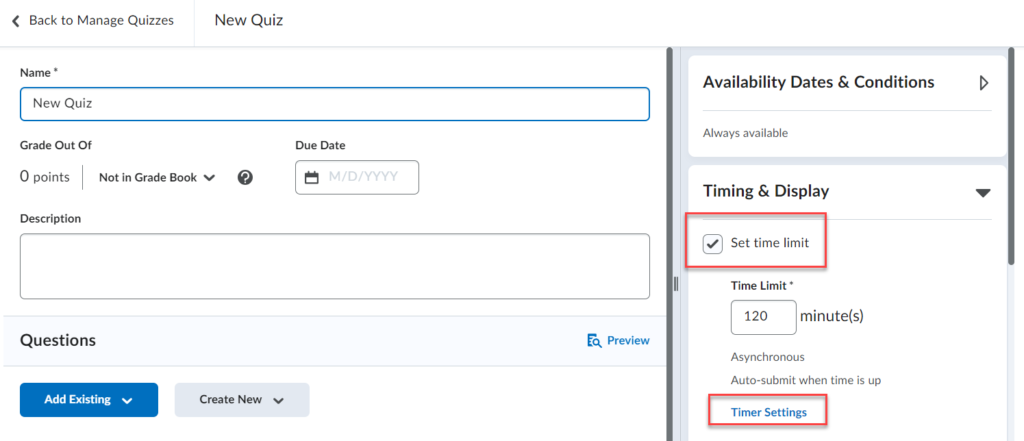

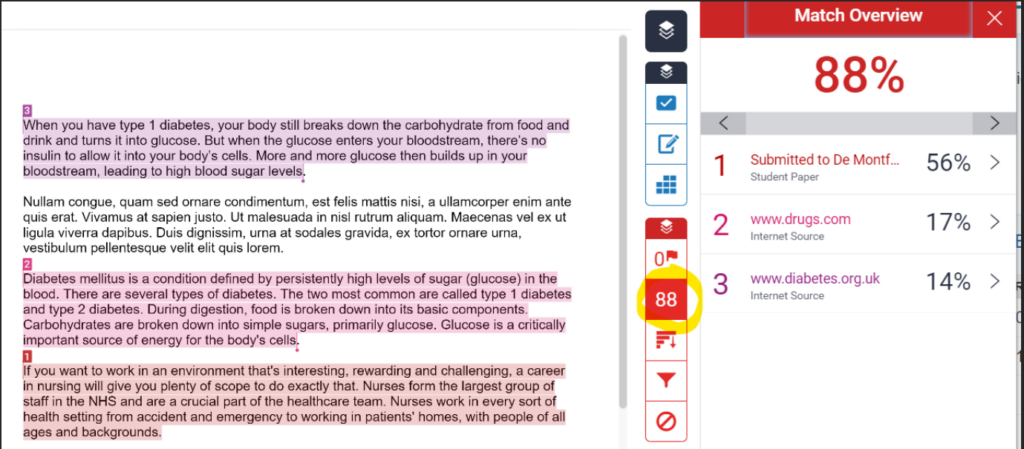

Turnitin is a plagiarism-prevention tool that helps check the originality of student writing. On April 4th, Turnitin released an AI-detection feature.

According to Turnitin, its detection tool works a bit differently. In the company's words:

When a paper is submitted to Turnitin, the submission is first broken into segments of text that are roughly a few hundred words (about five to ten sentences). Those segments are then overlapped with each other to capture each sentence in context.

The segments are run against our AI detection model, and we give each sentence a score between 0 and 1 to determine whether it is written by a human or by AI. If our model determines that a sentence was not generated by AI, it will receive a score of 0. If it determines the entirety of the sentence was generated by AI it will receive a score of 1.

Using the average scores of all the segments within the document, the model then generates an overall prediction of how much text (with 98% confidence based on data that was collected and verified in our AI innovation lab) in the submission we believe has been generated by AI. For example, when we say that 40% of the overall text has been AI-generated, we’re 98% confident that is the case.

Currently, Turnitin’s AI writing detection model is trained to detect content from the GPT-3 and GPT-3.5 language models, which includes ChatGPT. Because the writing characteristics of GPT-4 are consistent with earlier model versions, our detector is able to detect content from GPT-4 (ChatGPT Plus) most of the time. We are actively working on expanding our model to enable us to better detect content from other AI language models.
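The segment-and-average process Turnitin describes can be sketched roughly as follows. Everything here is an assumption made for illustration: the window size, the step between windows, the way segment scores are spread back onto sentences, and especially the `toy_scorer` function, which simply counts sentences labeled "AI:" and stands in for Turnitin's proprietary model. The sketch also makes no attempt to model the 98% confidence figure.

```python
def overlapping_segments(sentences, size=4, step=2):
    """Yield (start_index, window) pairs of overlapping sentence windows."""
    if not 0 < step <= size:
        raise ValueError("step must be between 1 and size so windows overlap")
    last_start = max(len(sentences) - size, 0)
    starts = list(range(0, last_start + 1, step))
    if starts[-1] != last_start:
        starts.append(last_start)  # make sure the final sentences are covered
    for start in starts:
        yield start, sentences[start:start + size]


def document_ai_share(sentences, score_segment, size=4, step=2):
    """Average segment scores per sentence, then average over the document."""
    if not sentences:
        return 0.0, []
    totals = [0.0] * len(sentences)
    counts = [0] * len(sentences)
    for start, window in overlapping_segments(sentences, size, step):
        score = score_segment(window)  # 0 = human-written, 1 = AI-generated
        for i in range(start, start + len(window)):
            totals[i] += score
            counts[i] += 1
    per_sentence = [t / c for t, c in zip(totals, counts)]
    return sum(per_sentence) / len(per_sentence), per_sentence


def toy_scorer(window):
    """Hypothetical stand-in for a detection model: share of 'AI:' sentences."""
    return sum(s.startswith("AI:") for s in window) / len(window)


sentences = ["AI: generated sentence."] * 4 + ["H: human sentence."] * 4
share, per_sentence = document_ai_share(sentences, toy_scorer)
print(per_sentence)  # [1.0, 1.0, 0.75, 0.75, 0.25, 0.25, 0.0, 0.0]
print(share)         # 0.5
```

Because the windows overlap, sentences near the boundary between the two halves pick up in-between scores. That shows how a sentence's score can depend on its neighbors as well as on the sentence itself.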

The Issues

AI detectors cannot prove conclusively whether text is AI-generated. With minimal editing, AI-generated content evades detection.

L2 (second-language) writers tend to write with less “burstiness.” Concern about bias is one of the reasons UBC chose not to enable Turnitin’s AI-detection feature.

ChatGPT’s writing style may be harder to spot than some think.

Privacy violations are a concern with both generators and detectors as both collect data.

Now what?

Langara’s EdTech, TCDC, and SCAI departments are working together to offer workshops on four potential approaches: Embrace it, Neutralize it, Ban it, Ignore it. Interested in a bespoke workshop for your department? Complete the request form.

References

Marcus, G. (2023, January 6). Ezra Klein interviews Gary Marcus [Audio podcast episode]. In The Ezra Klein Show. https://www.nytimes.com/2023/01/06/podcasts/transcript-ezra-klein-interviews-gary-marcus.html

Fowler, G. A. (2023, April 3). We tested a new ChatGPT-detector for teachers. It flagged an innocent student. Washington Post. https://www.washingtonpost.com/technology/2023/04/01/chatgpt-cheating-detection-Turnitin/
